In today’s fast-evolving technological landscape, the demand for scalable, efficient, and portable software solutions has never been higher. As organizations increasingly adopt cloud-based and distributed systems, the challenges of ensuring software consistency across different environments have become more pronounced. This is where Docker and containerization emerge as game-changing solutions, revolutionizing how software is developed, deployed, and managed. In this article, we will explore the intricacies of Docker and containerization, understand why they are essential, and examine their impact on modern software development.

Understanding Docker: The Basics

Docker is an open-source platform designed to simplify the creation, deployment, and management of applications within lightweight, portable, and self-sufficient environments known as containers. At its core, Docker provides tools to package an application along with its dependencies—such as libraries, frameworks, and runtime—into a single, standardized unit.

Key Components of Docker:

- Docker Engine: The runtime that enables the creation and management of containers. It consists of the Docker Daemon, REST API, and CLI (Command Line Interface).

- Docker Daemon: A background service that manages Docker objects such as images, containers, and networks.

- CLI: The docker command-line client, which sends commands to the Docker Daemon through the REST API to build images, run and stop containers, and manage other Docker objects.

- Docker Images: Immutable templates that serve as the blueprint for creating containers. These images include the application code, dependencies, and configuration settings required to run the application. Developers can either build their own images or pull pre-configured images from registries such as Docker Hub.

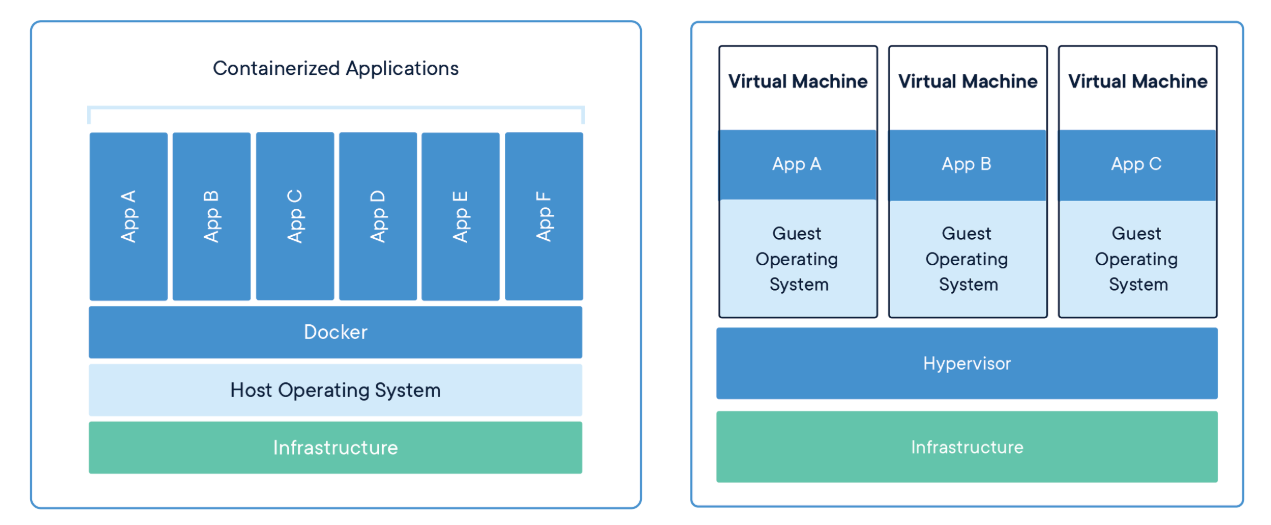

- Docker Containers: Lightweight, standalone units that encapsulate an application and its dependencies. Containers run from Docker images and share the host system’s OS kernel, which keeps their overhead well below that of virtual machines. Containers can be started, stopped, or replicated quickly, which makes it easy to adjust capacity as workloads change.

- Docker Hub: A cloud-based registry where users can find, share, and store Docker images. It serves as a marketplace for pre-configured container images, simplifying the development process and encouraging collaboration.

- Docker Compose: A tool for defining and running multi-container Docker applications. With a simple YAML file, users can configure multiple services, networks, and volumes, making it easier to manage complex applications.

- Docker Swarm: A native clustering and orchestration tool for Docker. It enables users to create and manage a cluster of Docker nodes, providing load balancing, high availability, and scalability for containerized applications.

- Docker Registry: A storage and distribution system for Docker images. Organizations can host their own private registries to keep control over proprietary images and streamline their CI/CD workflows.

- Docker Volumes: A mechanism for persisting data generated by containers. Volumes live outside a container’s writable layer, so their data survives when a container is removed or recreated and can be shared between containers. This keeps stateful applications such as databases from losing data.

- Dockerfile: A text document that contains all the commands required to assemble a Docker image. Developers use Dockerfiles to automate the creation of custom images tailored to specific application requirements.

- Docker Networks: A system that allows containers to communicate with each other and with external systems. Docker provides several network drivers, such as bridge, host, overlay, and macvlan, to suit various application needs.

- Docker Secrets: A secure way to manage sensitive information, such as API keys, passwords, or certificates, for services running in Docker Swarm mode. Secrets are encrypted in transit and at rest. They are mounted only into the containers of services that have been explicitly granted access to them.
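Several of these components usually meet in a single Docker Compose file. The sketch below is a minimal illustration, assuming a hypothetical two-service application. It writes the structure of a compose.yaml file as a Python dict, with services built from images, a user-defined network, a named volume, and a file-based secret. It also runs a small, simplified consistency check that flags volumes, networks, or secrets a service uses but the file never declares. The image tags, port mapping, file names, and the undeclared_references helper are illustrative assumptions. The check covers only the short string syntax, not everything Compose validates. With plain Compose, file-based secrets are mounted into containers under /run/secrets/<name>. Encrypted secret storage is a Swarm mode feature.

```python
import json
from typing import List

# A hypothetical two-service application, written with the same keys a
# compose.yaml file uses. Image tags, ports, and paths are illustrative.
compose = {
    "services": {
        "web": {
            "image": "nginx:alpine",
            "ports": ["8080:80"],  # host port 8080 -> container port 80
            "networks": ["backend"],
        },
        "db": {
            "image": "postgres:16",
            # The official postgres image can read its password from a file.
            "environment": {"POSTGRES_PASSWORD_FILE": "/run/secrets/db_password"},
            "volumes": ["db-data:/var/lib/postgresql/data"],  # named volume
            "networks": ["backend"],
            "secrets": ["db_password"],
        },
    },
    "volumes": {"db-data": {}},
    "networks": {"backend": {}},
    "secrets": {"db_password": {"file": "./db_password.txt"}},
}


def undeclared_references(spec: dict) -> List[str]:
    """List named volumes, networks, and secrets that a service uses but the
    top level never declares. Handles only the short string syntax."""
    problems = []
    for name, service in spec.get("services", {}).items():
        for mount in service.get("volumes", []):
            source, sep, _ = mount.partition(":")
            # Paths such as /srv/data or ./config are bind mounts, not named volumes.
            is_named = bool(sep) and not source.startswith(("/", ".", "~"))
            if is_named and source not in spec.get("volumes", {}):
                problems.append(f"{name}: volume '{source}'")
        for net in service.get("networks", []):
            if net not in spec.get("networks", {}):
                problems.append(f"{name}: network '{net}'")
        for secret in service.get("secrets", []):
            if secret not in spec.get("secrets", {}):
                problems.append(f"{name}: secret '{secret}'")
    return problems


print(undeclared_references(compose))  # → []
print(json.dumps(compose, indent=2))
```

Written out as compose.yaml, with a db_password.txt file next to it, the same structure would let docker compose up start both containers on the shared backend network. The database files would be kept in the db-data volume.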

What is Containerization?

Containerization is a technology that enables the packaging of an application and its dependencies into a container. This container can run consistently across diverse computing environments, whether it’s a developer’s local machine, an on-premises server, or a public cloud.

How Containerization Works: Containers run directly on the host system’s OS kernel but remain isolated from other containers and from the host. On Linux, this isolation comes from two kernel features. Namespaces give each container its own view of process IDs, network interfaces, mount points, and hostname. Cgroups (control groups) limit and account for the CPU, memory, and I/O each container can use.
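To make the cgroups half of this concrete, here is a sketch of how two common docker run resource flags, --memory and --cpus, translate into cgroup v2 limits. It follows Docker's documented semantics. --cpus=1.5 is equivalent to a CPU quota of 150000 microseconds per 100000-microsecond period, and memory suffixes such as m and g are binary multiples. Which files the runtime actually writes, and where, depends on the runtime and cgroup driver, so treat this as a model of the arithmetic rather than Docker's code. The helper names memory_max and cpu_max are hypothetical and only echo the cgroup v2 file names.

```python
# Docker's default CFS scheduler period, in microseconds.
CPU_PERIOD_US = 100_000

# Single-letter suffixes accepted by `docker run --memory`; Docker treats
# them as binary multiples (1k = 1024 bytes). Simplified: no "t", "kb", etc.
_UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def memory_max(limit: str) -> int:
    """Translate a --memory value like '512m' into bytes, the unit of
    the cgroup v2 memory.max file."""
    limit = limit.strip().lower()
    if limit[-1] in _UNITS:
        return int(float(limit[:-1]) * _UNITS[limit[-1]])
    return int(limit)  # a bare number is already in bytes


def cpu_max(cpus: float) -> str:
    """Translate a --cpus value into the '<quota> <period>' pair used by
    the cgroup v2 cpu.max file: quota microseconds of CPU time per period."""
    quota = round(cpus * CPU_PERIOD_US)
    return f"{quota} {CPU_PERIOD_US}"


print(memory_max("512m"))  # → 536870912
print(cpu_max(1.5))        # → 150000 100000
```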

The Importance of Docker and Containerization

1. Solving the “Works on My Machine” Problem

One of the most common challenges in software development arises when applications behave differently in development, testing, and production environments. These inconsistencies often result from variations in operating systems, software versions, or missing dependencies. Docker addresses this by bundling applications with the libraries, runtimes, and configuration they need, so the same image runs the same way across environments. Only the host kernel remains shared.

2. Portability

Docker containers are portable. An image can run on any host with a compatible container runtime and CPU architecture, whether that is a developer’s laptop, an enterprise data center, or a cloud service. This cross-platform compatibility simplifies collaboration among teams and streamlines deployment processes.
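One practical limit on portability is CPU architecture: an image built for x86-64 will not run natively on an ARM host. Multi-architecture images handle this with an image index (also called a manifest list) that points to one manifest per platform. When you pull such an image, Docker selects the entry matching the host. The sketch below models that selection step. The index contents and digests are hypothetical placeholders. The alias table covers only the common names Python's platform.machine() reports.

```python
import platform
from typing import Optional

# Python's platform.machine() names differ from the OCI architecture names
# used in image indexes. Only common cases are mapped here.
_ARCH_ALIASES = {"x86_64": "amd64", "amd64": "amd64", "aarch64": "arm64", "arm64": "arm64"}

# A hypothetical multi-architecture image index; the digests are placeholders.
INDEX = {
    "manifests": [
        {"digest": "sha256:<amd64-manifest>",
         "platform": {"os": "linux", "architecture": "amd64"}},
        {"digest": "sha256:<arm64-manifest>",
         "platform": {"os": "linux", "architecture": "arm64"}},
    ]
}


def select_manifest(index: dict, os_name: str, machine: str) -> Optional[str]:
    """Return the digest of the manifest built for this OS/architecture, or None."""
    arch = _ARCH_ALIASES.get(machine.lower(), machine.lower())
    for entry in index["manifests"]:
        plat = entry["platform"]
        if plat["os"] == os_name and plat["architecture"] == arch:
            return entry["digest"]
    return None  # no variant for this platform: the image cannot run natively


# These are Linux images; on macOS and Windows, Docker Desktop runs them
# inside a lightweight Linux VM, so the OS to match is still "linux".
print(select_manifest(INDEX, "linux", platform.machine()))
```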

3. Resource Efficiency

Unlike traditional virtual machines (VMs) that require a complete OS for each instance, containers share the host system’s OS kernel. This approach significantly reduces resource consumption, enabling faster startup times and higher density on a single host machine.

4. Scalability

Containers are lightweight and can be started or stopped quickly. This makes them well suited to scaling applications dynamically based on demand. Images built with Docker also run on orchestration platforms such as Kubernetes, which automate the scaling and management of containerized applications.
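Docker itself does not decide how many containers to run. An orchestrator does. As one concrete example, the Kubernetes Horizontal Pod Autoscaler documents a proportional rule: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The autoscaler skips scaling when that ratio is within a tolerance of 1.0, which defaults to 0.1. The sketch below implements only that core rule. The real controller also applies minimum and maximum replica bounds and stabilization windows, and it handles missing metrics. The function name is a hypothetical choice.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Proportional scaling rule from the Kubernetes HPA documentation.

    Returns ceil(current_replicas * current_metric / target_metric), or the
    current count unchanged when that ratio is within `tolerance` of 1.0.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    return math.ceil(current_replicas * ratio)


print(desired_replicas(2, 200, 100))  # → 4: load is double the target
print(desired_replicas(3, 50, 100))   # → 2: ceil(1.5)
print(desired_replicas(3, 105, 100))  # → 3: within the 10% tolerance
```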

5. Simplified Development and CI/CD Pipelines

Docker enhances developer productivity by providing a consistent environment across development, testing, and production. Most Continuous Integration and Continuous Deployment (CI/CD) systems can build images and run containers as pipeline steps, enabling automated building, testing, and deployment of applications.

Docker in Action: Use Cases

1. Microservices Architecture

Docker is a cornerstone of microservices architecture, allowing developers to break down monolithic applications into smaller, independently deployable services. Each service runs in its own container, making it easier to develop, deploy, and maintain.

2. Cloud-Native Applications

Cloud-native development leverages the elasticity and scalability of cloud environments. Docker’s portability means containerized applications can be deployed on any cloud platform that runs containers, with little or no modification, supporting multi-cloud and hybrid cloud strategies.

3. Continuous Testing and Deployment

Docker containers provide isolated environments for running tests, enabling developers to perform unit tests, integration tests, and end-to-end tests without affecting other processes. This isolation ensures more reliable and faster CI/CD pipelines.
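A CI job typically gets this isolation by running the test suite in a disposable container. The sketch below builds such a docker run command as an argument list. It uses --rm, which removes the container when the tests exit, and --network none, which gives it no network access so tests cannot touch shared services. Both flags are standard docker run options. The image name myapp:ci and the pytest command are hypothetical assumptions about the project being tested.

```python
import shlex
from typing import List


def isolated_test_command(image: str, test_cmd: List[str]) -> List[str]:
    """Build a `docker run` invocation that runs tests in a throwaway,
    network-isolated container."""
    return [
        "docker", "run",
        "--rm",               # remove the container (and its writable layer) on exit
        "--network", "none",  # no network: tests cannot touch shared services
        image,
        *test_cmd,            # runs instead of the image's default command
    ]


# "myapp:ci" is a hypothetical image that has pytest installed.
cmd = isolated_test_command("myapp:ci", ["pytest", "-q"])
print(shlex.join(cmd))  # → docker run --rm --network none myapp:ci pytest -q
```

In a pipeline script, the list can be passed directly to subprocess.run(cmd, check=True). docker run exits with the container's exit code, so a failing test run then fails the job.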

4. Legacy Application Modernization

Organizations often face challenges when modernizing legacy applications. Docker simplifies this process by encapsulating legacy applications in containers, making them portable and compatible with modern infrastructure.

Advantages of Docker and Containerization

- Consistency: By encapsulating an application and its dependencies, Docker ensures that the software behaves consistently across environments.

- Speed: Containers typically start in seconds or less because no guest operating system has to boot, enabling rapid deployment and scaling.

- Isolation: Each container operates independently, reducing the risk of conflicts between applications.

- Cost Efficiency: Containers maximize resource utilization, allowing organizations to run more applications on the same hardware.

- Community Support: Docker boasts a vast ecosystem and community, offering extensive documentation, pre-built images, and third-party tools.

Challenges and Limitations

While Docker and containerization offer numerous benefits, they also present certain challenges:

- Learning Curve: Developers and operations teams need to familiarize themselves with containerization concepts and tools.

- Security: Containers share the host OS kernel, which can introduce security risks if not properly managed. Best practices, such as using minimal base images and regularly rebuilding images to pick up security patches, are essential.

- Networking Complexity: Managing container networking in large-scale deployments can be challenging, requiring expertise in tools like Kubernetes.

- Storage Management: Ensuring persistent storage for stateful applications in containerized environments can be complex.

The Future of Docker and Containerization

Docker and containerization are central to the future of software development. As organizations adopt DevOps practices and cloud-native strategies, the demand for scalable and portable solutions will continue to grow. Emerging trends such as serverless computing, edge computing, and AI-driven automation are further expanding the scope of containerization.

Additionally, the integration of Docker with advanced orchestration platforms like Kubernetes and OpenShift is streamlining the management of complex, distributed systems. This evolution is enabling organizations to achieve greater agility, resilience, and efficiency.

Conclusion

Docker and containerization have transformed the software development landscape, addressing key challenges related to consistency, scalability, and efficiency. By enabling applications to run seamlessly across diverse environments, Docker ensures that developers and organizations can focus on innovation rather than infrastructure. As technology continues to evolve, Docker’s role as a foundational tool for modern development practices is set to grow, solidifying its position as an indispensable asset in the digital era.